Assignment 5: Creating 3D Models

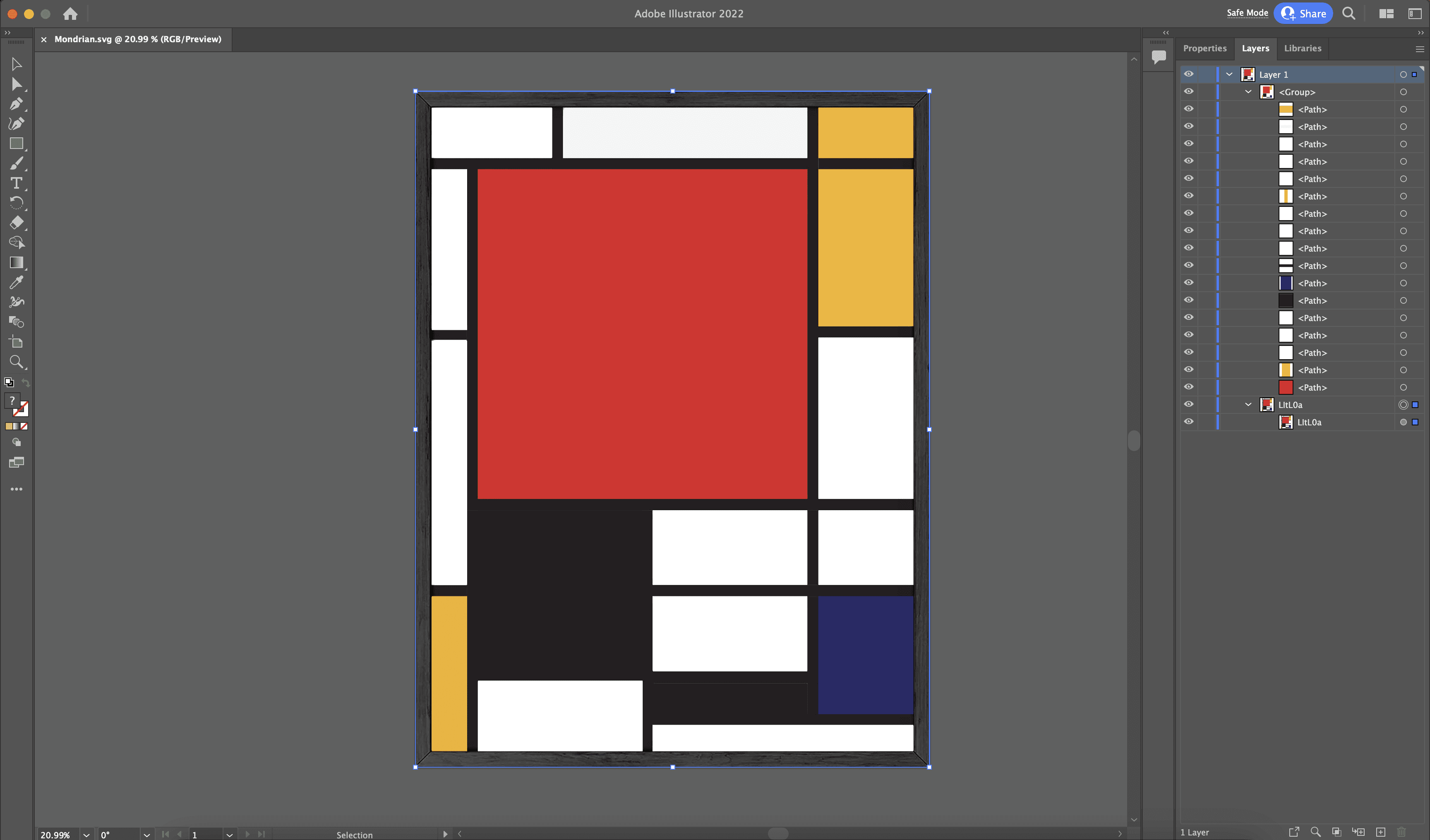

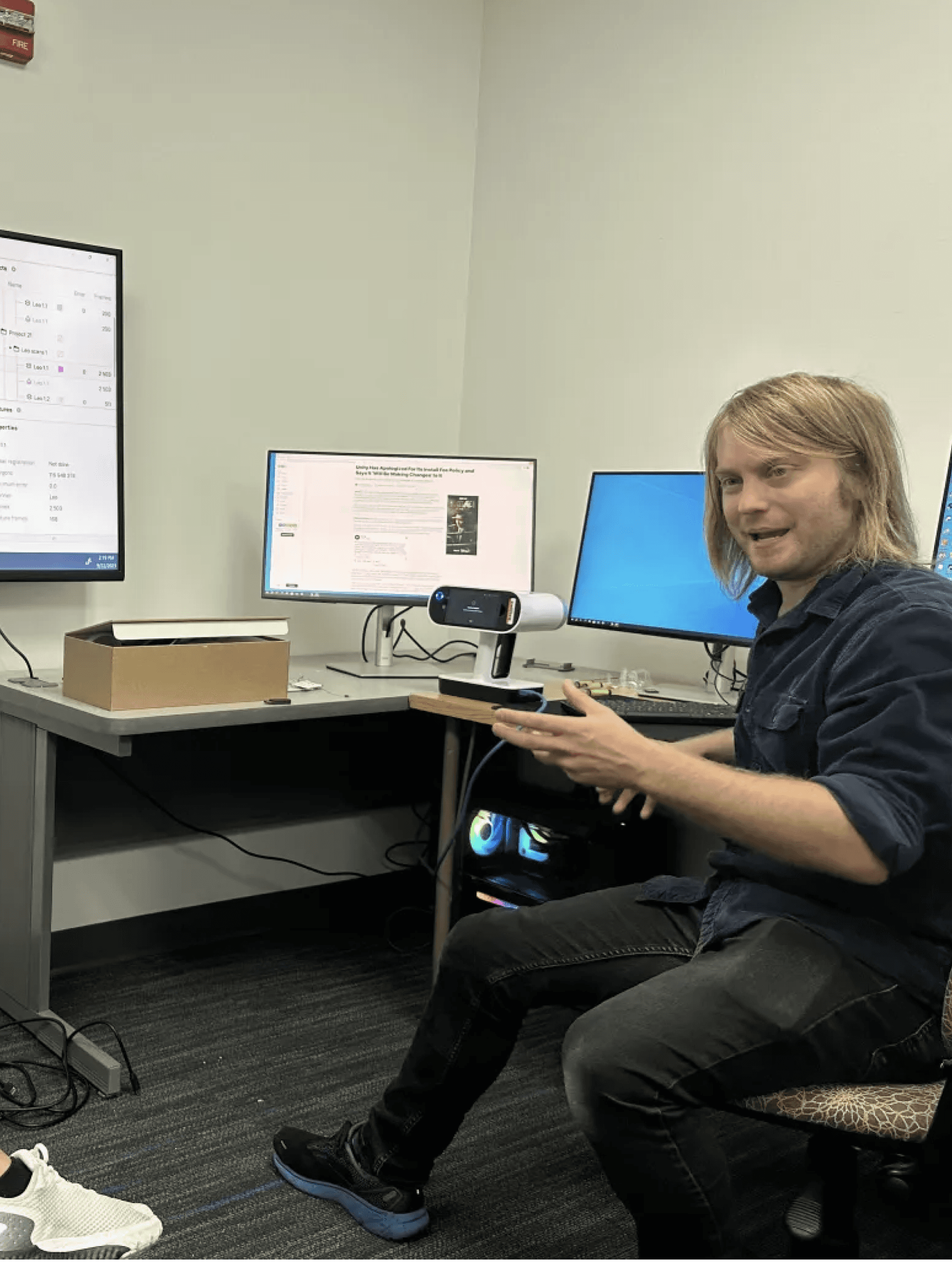

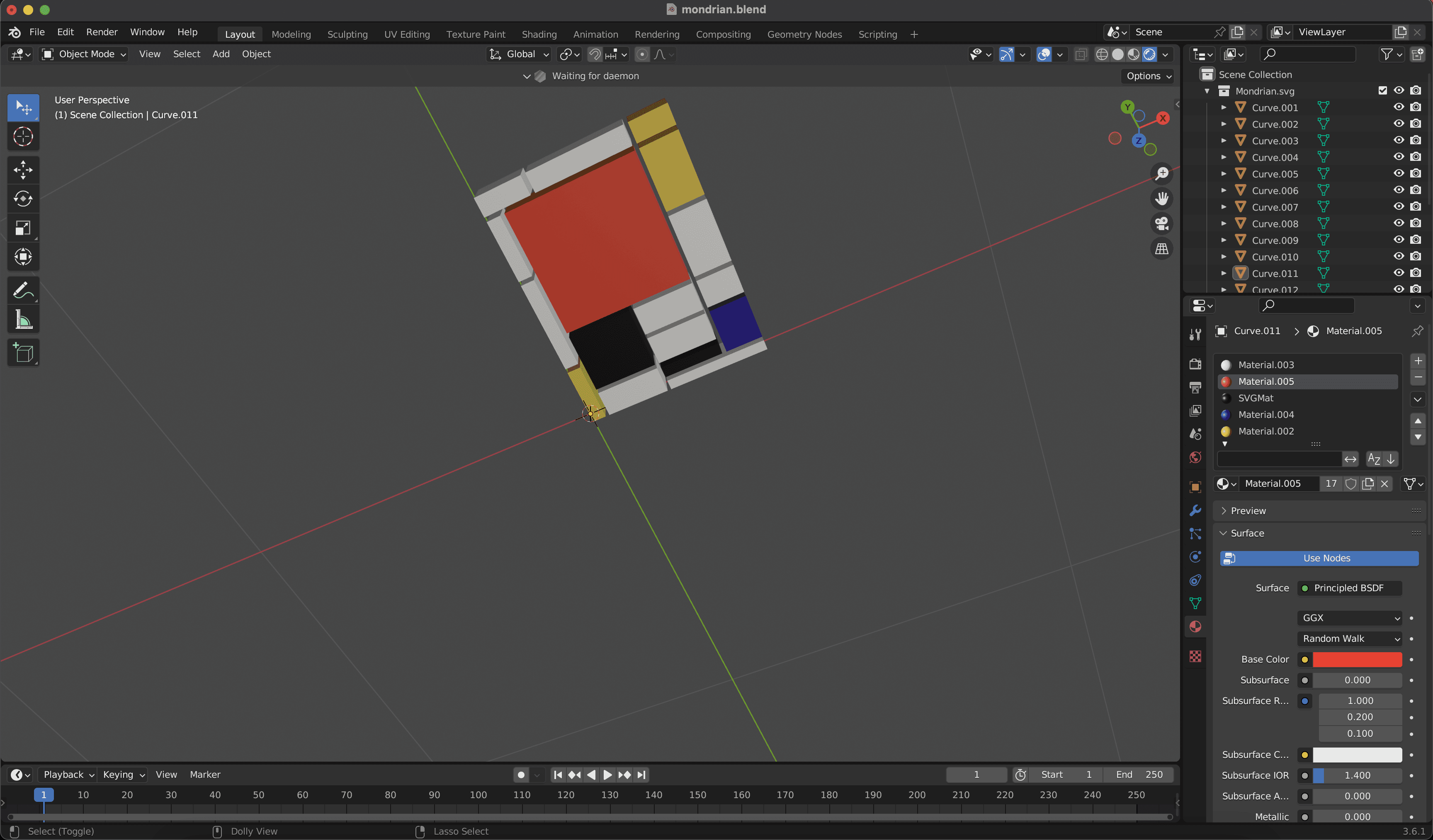

For 3D models, we began by working on artworks with simple shapes. I practiced creating a 3D model of the Mondrian by first tracing each shape in Adobe Illustrator and then extruding the objects in Blender to create the model. Below is my practice model.

The Fall 2023 semester taught me much more about considering design. It taught me how much spatial awareness can shape an excellent or poor experience and how audio can transform something boring into something magical. It also taught me that not everything is always going to work out. Because Augmented Reality is so new, there isn’t a 'defined' principle for how to approach things, and experiencing something new is both challenging and rewarding as I continue to discover and learn how to become a better designer every day.

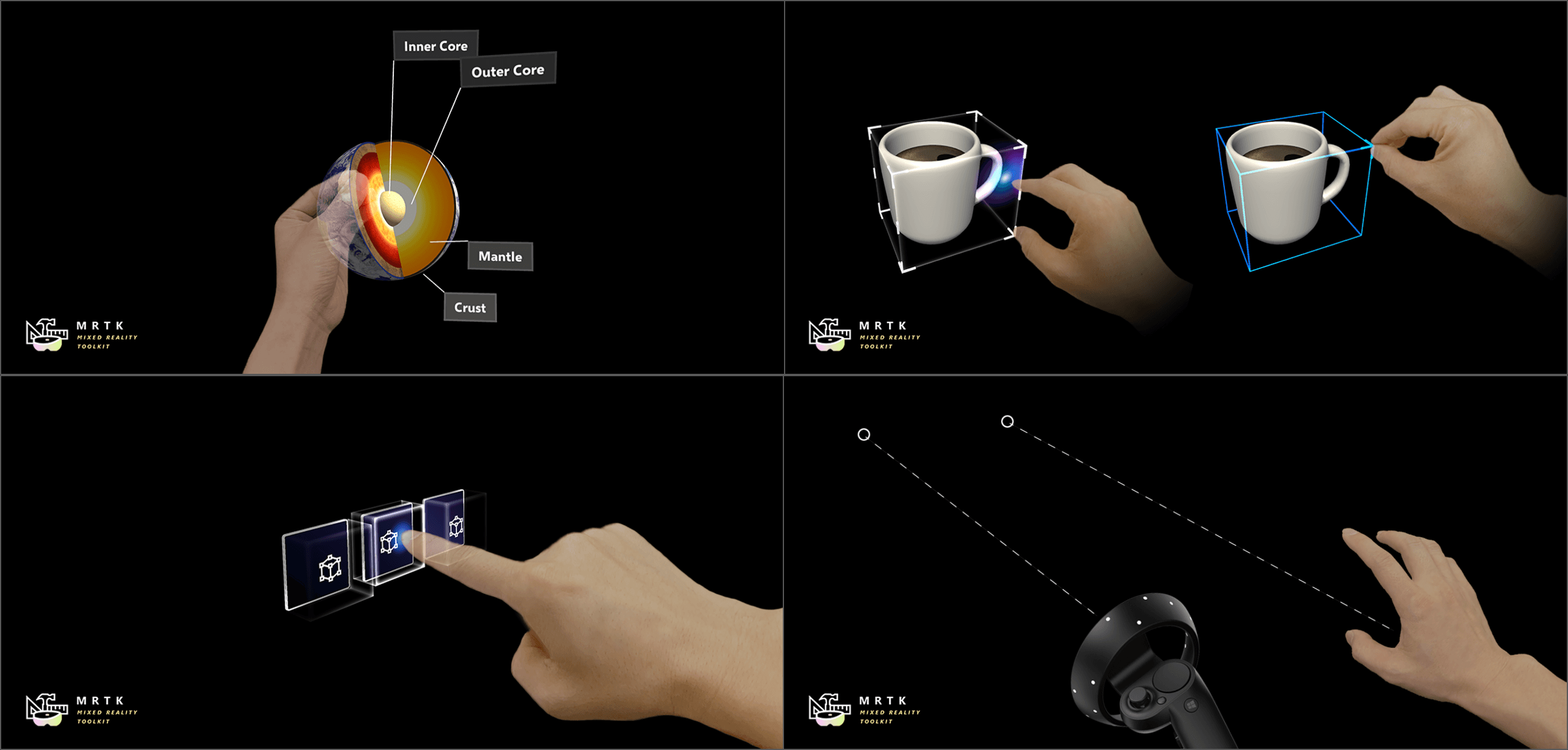

Assignment 6: Creating a Unity Scene with the Microsoft HoloLens 2

With the 3D model and assistance from my professor's C# script, we utilized the Mixed Reality Toolkit to create a touch-to-text system that notifies visually impaired users when they touch a certain area of the artwork. As this is our initial prototype, we designed it to describe the color of each section they are hovering over to keep it simple.
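To make the touch-to-text idea concrete, here is a minimal sketch of the lookup behind it, written in Python rather than as the C# script used in the project (which is not shown here). It assumes the artwork is split into rectangular color sections in normalized coordinates; the `Region` class, the `REGIONS` layout, and `describe_touch` are hypothetical names for illustration, not part of the Mixed Reality Toolkit.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Region:
    """A rectangular section of the artwork in normalized coordinates (0 to 1)."""
    name: str
    color: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        """Return True when the point (x, y) lies inside this rectangle."""
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


# Hypothetical layout for a simple four-block artwork (assumed, not measured).
REGIONS: List[Region] = [
    Region("top-left block", "red", 0.0, 0.5, 0.6, 1.0),
    Region("top-right block", "white", 0.6, 0.5, 1.0, 1.0),
    Region("bottom-left block", "blue", 0.0, 0.0, 0.3, 0.5),
    Region("bottom-right block", "yellow", 0.3, 0.0, 1.0, 0.5),
]


def describe_touch(x: float, y: float, regions: List[Region] = REGIONS) -> Optional[str]:
    """Return the text to speak for a touch at (x, y), or None if outside the artwork.

    When a point sits on a shared edge, the first matching region wins.
    """
    for region in regions:
        if region.contains(x, y):
            return f"{region.color} ({region.name})"
    return None


if __name__ == "__main__":
    print(describe_touch(0.1, 0.9))
    print(describe_touch(1.5, 0.5))
```

In a headset build, the returned string would be handed to a text-to-speech step; that part is left out of this sketch.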

After developing a potential solution, we needed to learn the skills required to build out this concept. Practicing each step along the way helped me understand how 3D scanning and spatial awareness will play a role in developing this software. Another key aspect is sound: spatial audio and text-to-speech will make everything come to life, as they will be one of the main communication methods for conveying what the art piece is displaying.

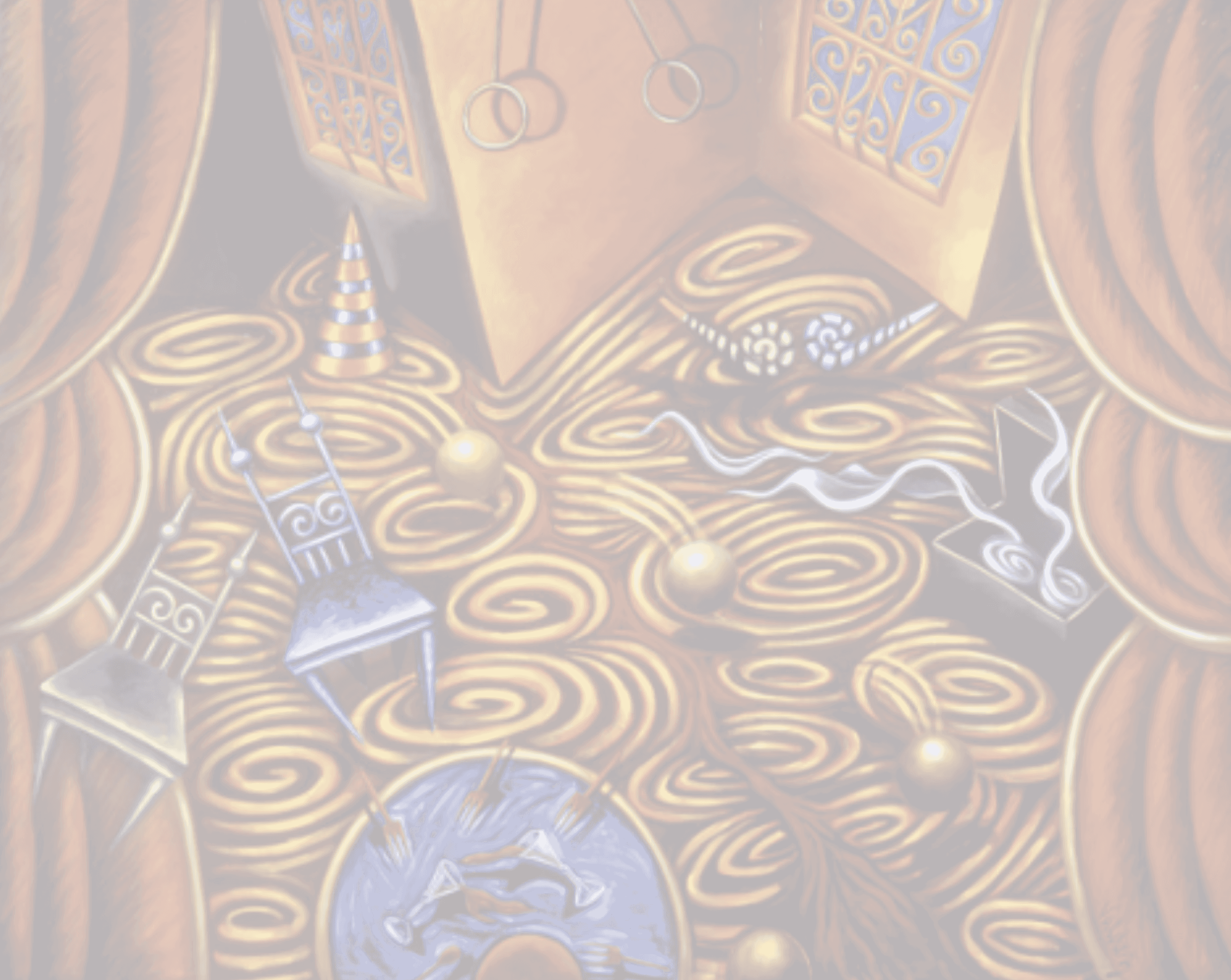

Assignment 1: Image Descriptions

Our first assignment was very simple, but it plays a crucial part in learning how to visually describe artworks. Having taken multiple art classes and participated in design critiques helped me with this assignment, as we practiced describing an image both as a whole and area by area. Here is an example of my descriptions:

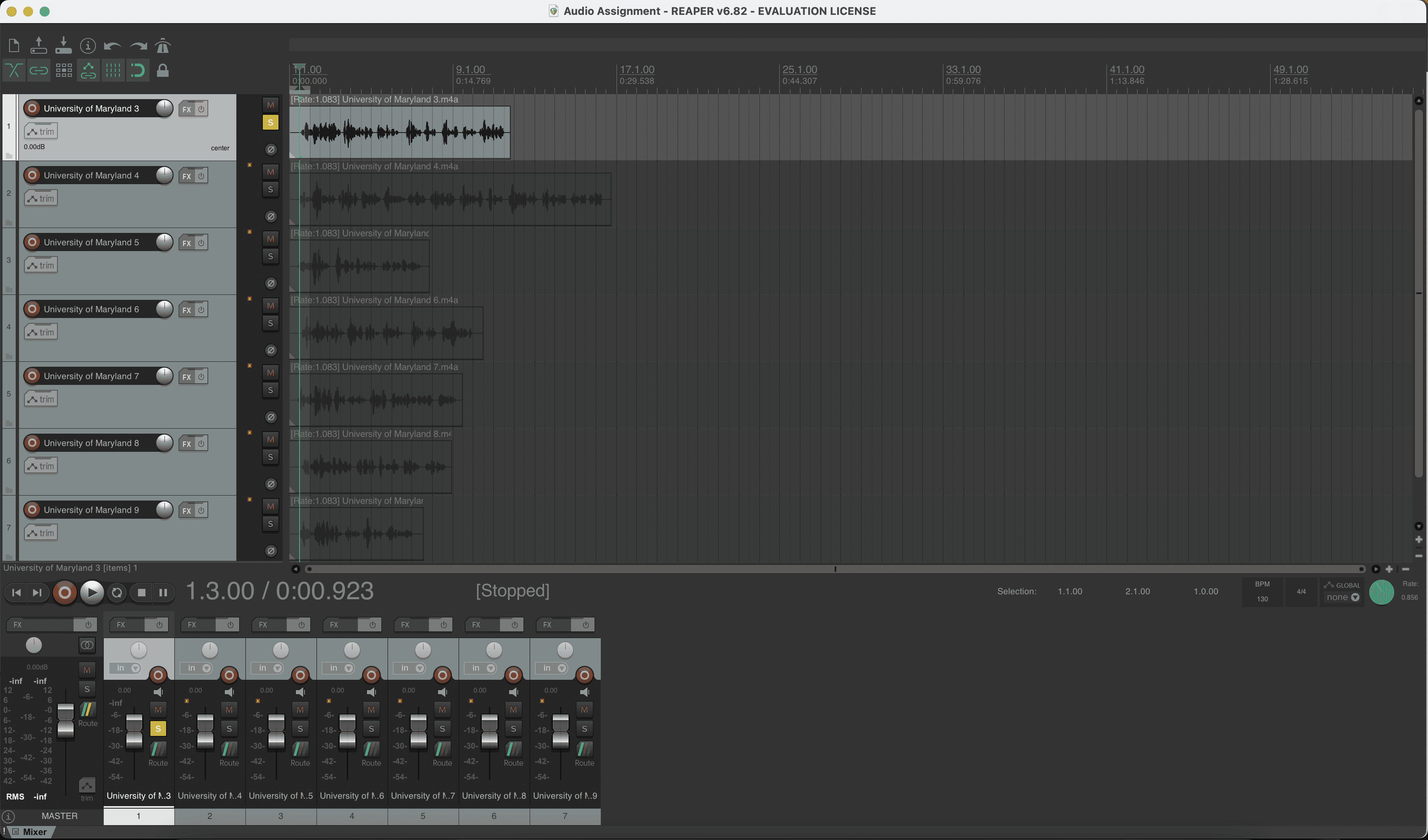

Assignment 2: Audio Descriptions with Reaper

Our second assignment involved learning the basics of recording audio within Reaper. This gave us a unique perspective on how to record our voice for art descriptions and how to incorporate audio in our work moving forward.

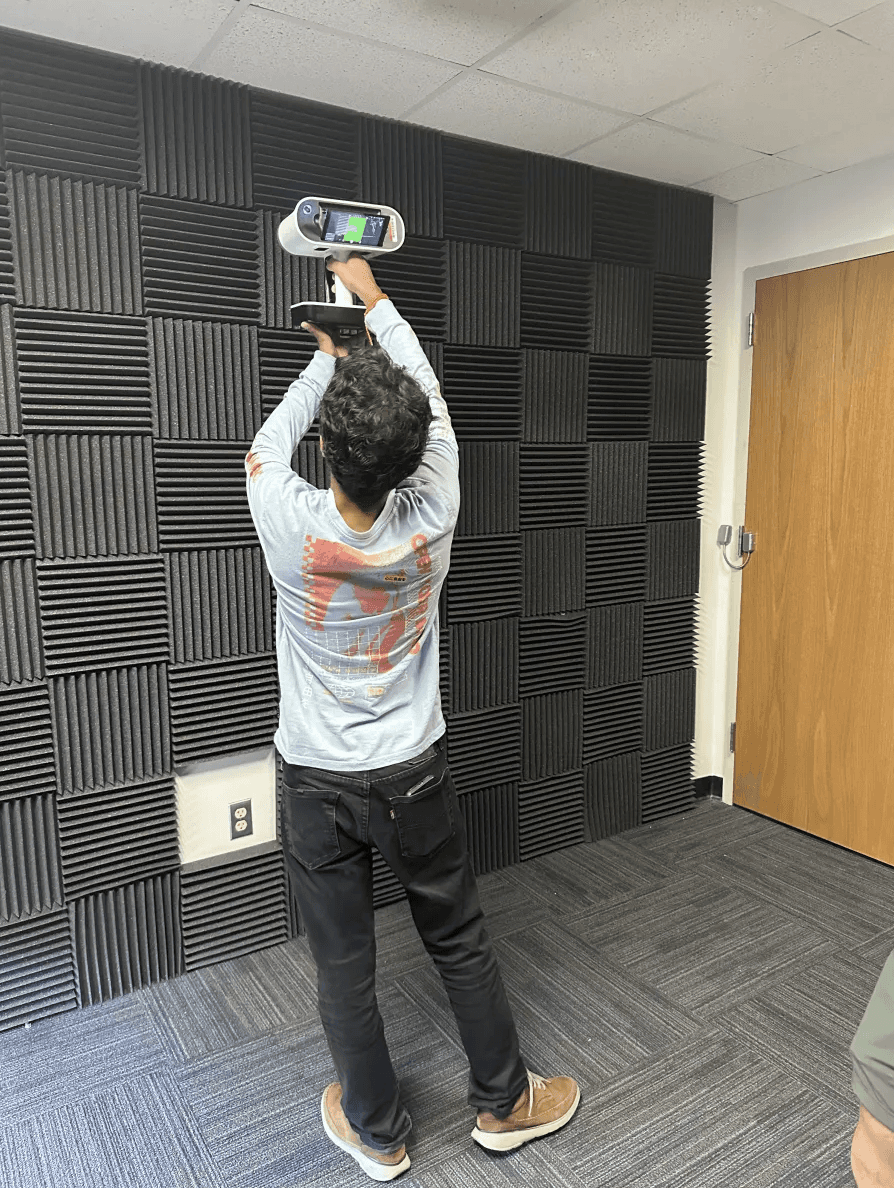

Assignment 3: Photogrammetry with Artec Leo

Our third assignment taught us the concept of photogrammetry and how multiple photos or scans can be combined into one model. We also had the privilege of using the Artec Leo, an advanced handheld 3D scanner, to practice scanning and capturing future sculptures. Although the Artec Leo provided the most accurate and realistic scans, the scanner emitted too many flashes during the scan, potentially causing shading issues when scanning sculptures in person. Due to this, we decided to capture future work using regular cameras instead.

From a Distance: From afar, the image resembles a bowl of spaghetti surrounded by objects. Circular yellow shapes with a hint of brownish-red create the illusion of pasta in sauce. The circular objects look like meatballs in the dish. The red outline could be a bowl, the chairs might be forks, and the smaller details seem unidentifiable.

From Closer Up: As you get closer, the spaghetti-like image becomes clearer. It appears to be a carpet on the floor. Green and blue chairs sit on top of it. The red outline now looks like curtains, suggesting a theater or show. There's a building with windows in the middle background, and the bottom middle circle resembles a pool with forks, glasses, and more. A traffic cone on the upper left suggests caution. On the right, there's a chest with something airy or ribbon-like coming out, matching the floor's style.

Center: In the center, you can see the spaghetti-like floor in more detail. The artist used yellows, reds, and blacks to create the circular effect. The "meatballs" now appear as balls in motion on the floor.

Top Left: From the top left, you see reds, yellows, and blacks creating the curtain effect. The dark background of the house signifies darkness, with a bright window as a focal point. The traffic cone hints at something starting. The transition from the dark background to the spaghetti floor is abrupt.

Top Right: The top right view offers a better look at a wall's side. Two walls give depth to the image, making it more 3D. The curtains maintain their style. The floor has candy cane-like patterns in greenish yellows and blueish purples, introducing a unique element.

Bottom Left: The bottom left view reveals green and blue chairs following the floor's swirl pattern. A blue pool with red forks, plates, spoons, and glasses, seemingly pouring red liquid, is a distinctive focus area.

Bottom Right: From the bottom right, you see the spaghetti floor with a red-black root, suggesting a disruption. On the far right, the curtains return, and more balls head towards this mysterious root.


Assignment 4: Photogrammetry at the National Gallery of Art

Learnings

IDEATION

After our first few assignments and training sessions, we took a class field trip to the National Gallery of Art! During this time, we used cameras to practice photogrammetry on different paintings and sculptures. Our plan was then to combine all the photos in Reality Capture to form each piece; however, we encountered multiple difficulties, as some of the true colors were not picked up correctly. Due to this, we decided to test with art pieces available on museum websites so we could focus on building the actual prototype first.


Benefits of Haptics

Enhanced Immersion: Haptics simulate the sense of touch, allowing users to feel virtual objects as if they were real. This enhances the sense of immersion by providing a more complete sensory experience.

Improved Interaction: With haptic feedback, users can better interact with virtual objects by receiving tactile cues when they touch or manipulate them. This makes interactions more intuitive and realistic.

Spatial Awareness: Haptics can provide spatial cues that help users understand the position, size, and texture of virtual objects within the environment. This improves spatial awareness and helps users navigate and interact with the virtual world more effectively.

Feedback and Response: Haptic feedback can convey information about actions and events in the virtual environment, such as confirming a button press or indicating when an object has been grabbed or manipulated. This feedback loop enhances user engagement and responsiveness.

Safety and Comfort: Haptics can also provide feedback that enhances safety and comfort in MR experiences. For example, haptic cues can warn users of virtual obstacles or alert them to potential dangers, improving overall user comfort and reducing the risk of motion sickness.

Audio Immersion: With haptics, you can connect a pair of headphones and use only the haptic controllers to create a sense of immersion, without needing the headset.

Question: Why are haptics important for visually impaired users?

Why: It improves people’s interactions by providing a better sense of immersion and interaction, as well as an overall better understanding of their surroundings and how to interact with virtual objects.

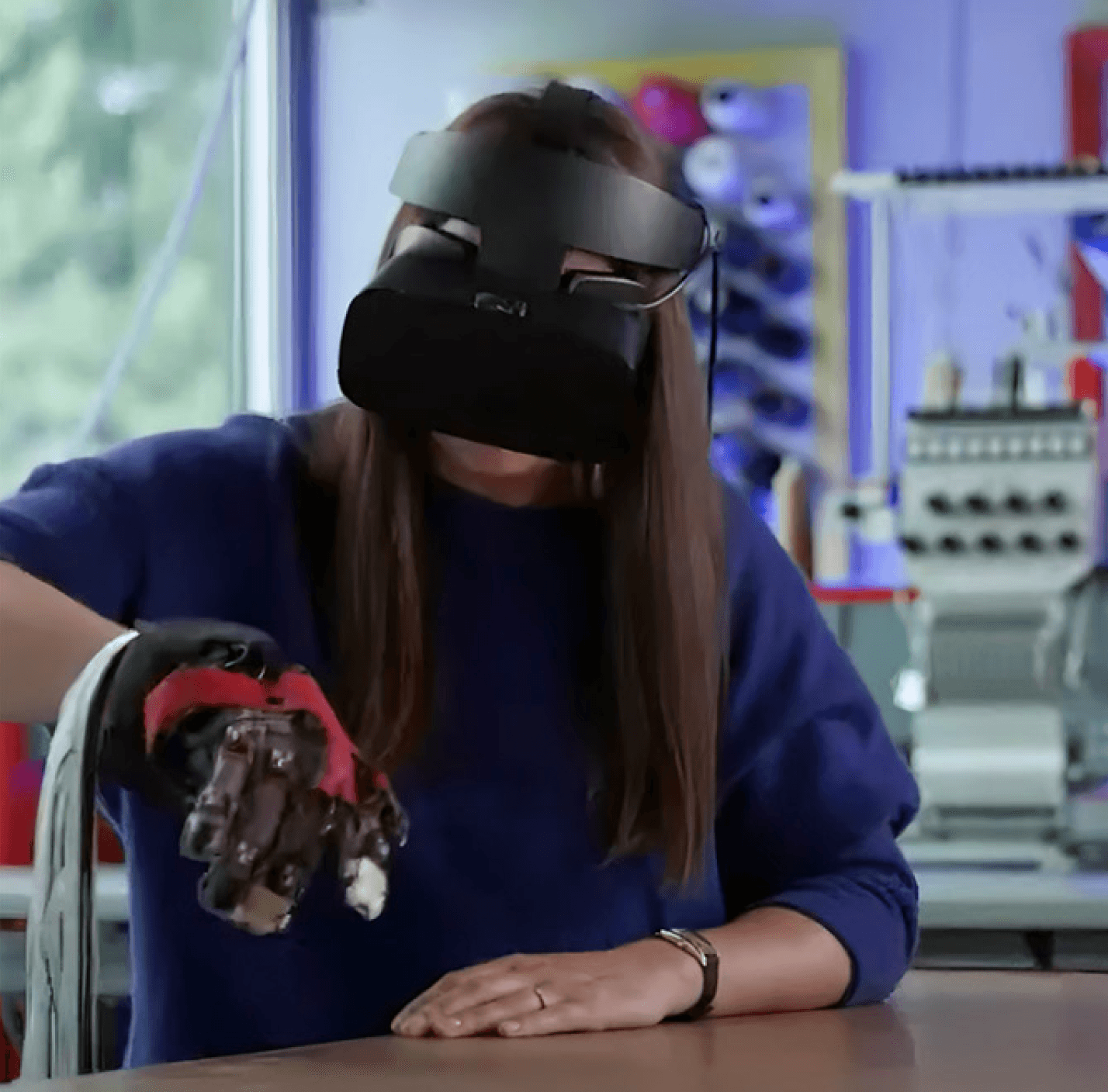

What: A new haptic feedback feature has been added to the project specifically for the Quest 3. This feature utilizes controllers for navigation within the experience and aims to improve their sensory perception of artworks and their textures.

How: Utilizing the Quest 3 headset along with Meta Haptics Studio and TruTouch technology.

Who: Visually impaired users at the museums

Guideline for Haptics

After two semesters of working with the user group and researching spatial awareness, audio, and more, we began presenting our initial prototypes to different art galleries and museums. Our next task was to set our goals for the spring 2024 semester to improve the prototype. Given my focus on human-centered design and on how people connect through their senses, I decided to focus on haptics for the remainder of my work on the research project. One thing I noticed is that key senses such as hearing and touch help many people understand where they are and what they are feeling. Without haptics, however, the experience doesn’t fully immerse the user within the artwork. I conducted initial research on how haptics and controllers can be part of a comprehensive solution for our next steps while utilizing the Meta Quest 3.

IDEATION

Haptics

SpatialSense

AR Research Study

Individual research study overview

PROCESS HIGHLIGHTS

Timeline

Mar 2023 - May 2024

Responsibilities

Tools

UX Research

Design Thinking

Unity

Blender

Adobe Illustrator

Reaper

3D Prototyping

AR Development

Will Le

Ian McDermott

Ben Margolin

Eva Ginns

Team

Challenge

How can we enhance the experience of visually impaired users within the realm of art?

Changing the way visually impaired users interact with artworks through the use of Augmented Reality.

Opportunity

AJ Kaladi

Problem Discovery

BACKGROUND

The project's primary objective is to address the challenge of providing visually impaired individuals with autonomous access to visual artworks by leveraging augmented reality (AR) as a sensory substitution method. It will focus on developing a HoloLens application that enables users to comprehend visual artworks independently. Students involved in the project will explore a range of Immersive Media technologies, including 3D scanning, photogrammetry, generative music, hand tracking, holography, and AR head-mounted displays. The project will utilize software tools such as Reality Capture, Unity, and the Mixed Reality Toolkit, with a particular emphasis on integrating accessibility features like sensory substitution and visual description.

The Process

Research

User Interviews

Desk Research

Haptics

Ideation

Brainstorming Solutions

Learnings

Final Designs

Prototype Demo

Reflection

Next Steps


User Interviews

RESEARCH

Before officially starting my independent study with Ian and my classmates, I conducted user interviews for another class to learn more about how Augmented Reality can help visually impaired users in general. From these initial findings, I highlighted my key takeaways from industry professionals, individuals facing personal difficulties, and research papers.

David Weintrop’s Insights on Design Thinking:

Stressed user-centric design approach.

Advocated for thorough research on user needs and wants.

Emphasized iterative design process and continuous improvement.

DORS (Deaf Services Division of Rehabilitation Services):

Addressed challenges faced by Deaf individuals in job seeking.

Highlighted the importance of soft skills and resume development.

Noted reliance on interpreters and VRI technology for communication.

Berkeley's Augmented Reality Study for Visually Impaired People:

Explored the potential of AR to enhance daily life for visually impaired individuals.

Detailed features like virtual maps, text recognition, and surroundings perception.

Highlighted successful features such as "spotlight mode," "proximity mode," and "capture text."

Critiqued limitations including high cost of hardware like Microsoft HoloLens.

Desk Research

RESEARCH

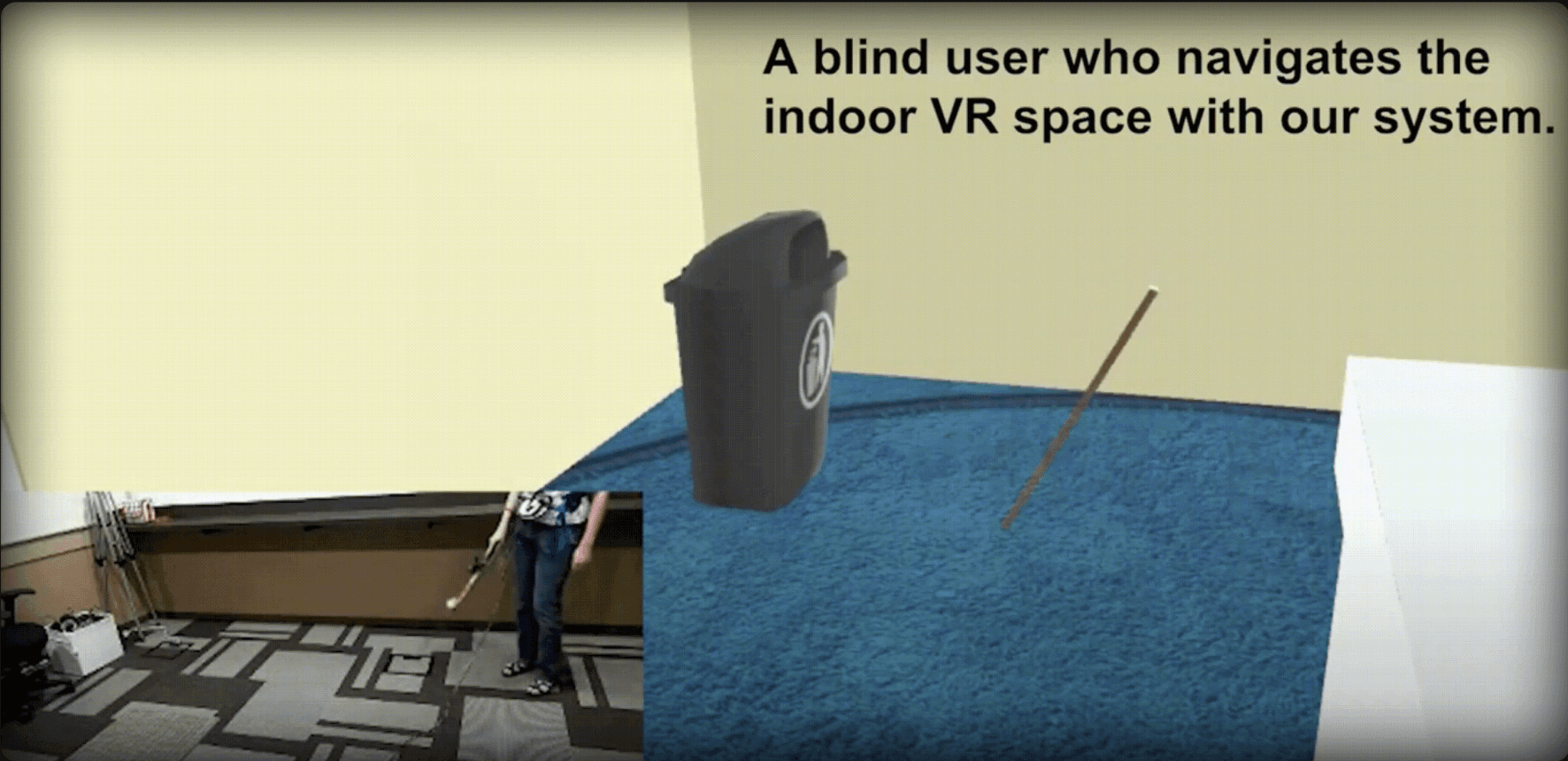

After conducting the interviews, I also wanted to explore how other companies and technologies implement detection systems to gain a better understanding of how Augmented Reality, cameras, and knowledge of spatial audio and awareness play a role in the development process. Below are the notes I recorded and some of the pros and cons of these different systems.

Tesla

Ultrasonic sensors: Tesla vehicles are equipped with multiple ultrasonic sensors positioned around the car to detect objects in close proximity.

Object detection: The ultrasonic sensors emit high-frequency sound waves and measure their reflection off nearby objects to determine their distance and location relative to the vehicle.

Parking assistance: Tesla's proximity detection system assists drivers in parking by providing visual and audible alerts when the vehicle approaches obstacles such as walls, curbs, or other vehicles.

Collision avoidance: The system helps prevent collisions by alerting the driver or automatically applying brakes if an object is detected too close to the vehicle, especially during low-speed maneuvers.

Summon feature: Tesla's Summon feature allows the vehicle to autonomously maneuver in and out of parking spaces. The proximity detection system plays a crucial role in ensuring the safety of this feature by detecting nearby obstacles.

Autopilot functionality: Tesla's Autopilot system utilizes proximity detection alongside other sensors like cameras and radar to enable semi-autonomous driving capabilities, including adaptive cruise control, lane-keeping assistance, and automatic lane changes.

Enhanced safety: By constantly monitoring the vehicle's surroundings, the proximity detection system enhances overall safety by providing real-time alerts and assisting the driver in avoiding potential collisions.

Continuous improvement: Tesla frequently updates its software to enhance the performance and capabilities of its proximity detection system, leveraging advancements in sensor technology and artificial intelligence algorithms.
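The object-detection note above describes measuring the reflection of a sound pulse to find distance. As a rough illustration of that general time-of-flight principle (not Tesla's actual implementation, which is not documented here), the sketch below converts an echo's round-trip time into a distance. The function name and the 343 m/s speed of sound (dry air at about 20 °C) are assumptions for the example.

```python
# Approximate speed of sound in dry air at about 20 degrees Celsius (assumed value).
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_distance_m(round_trip_s: float, speed_m_per_s: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Estimate the distance to an object from an ultrasonic echo.

    The pulse travels to the object and back, so the one-way distance
    is half of the total path: distance = speed * time / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return speed_m_per_s * round_trip_s / 2.0


if __name__ == "__main__":
    # An echo that returns after 10 milliseconds.
    print(f"{echo_distance_m(0.010):.3f} m")
```

The same halving step applies to any echo-based sensor, which is why it is a useful mental model when comparing detection systems.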

Pros

High Accuracy: Ultrasonic sensors provide precise measurements of object distances, enhancing safety for both Tesla drivers and pedestrians.

Real-time Alerts: Immediate visual and audible alerts help drivers avoid collisions and navigate tight spaces effectively.

Autonomous Features: Features like parking assistance and Summon utilize proximity detection to automate vehicle maneuvers, reducing the driver's workload.

Enhanced Safety: Constant monitoring of surroundings enhances overall safety by providing proactive alerts and assistance.

Continuous Improvement: Tesla's frequent software updates ensure that the system remains up-to-date with the latest advancements, improving performance and capabilities over time.

Cons

Dependency on Sensors: The system relies heavily on sensors, which may not always accurately detect all objects or obstacles, especially in complex environments.

Limited to Tesla Vehicles: The benefits of the proximity detection system are only available to Tesla vehicle owners, limiting its accessibility.

Complexity for Visually Impaired Users: While the system enhances safety for sighted users, its visual and auditory alerts may not be accessible or useful for visually impaired individuals.

Cost: The advanced technology integrated into Tesla vehicles can result in higher costs, making it inaccessible to some users.

Potential Overreliance: Drivers may become overly reliant on the system's capabilities, leading to complacency or reduced vigilance while driving.

Walking Cane

Traditional White Cane Simulation: Replicates traditional white cane behavior using haptic feedback. Emulates the tactile sensation of cane-to-obstacle contact.

Obstacle Detection: Laser sensor detects obstacles within a 1-meter range. Short pulse indicates obstacle presence.

Haptic Feedback: Utilizes varying haptic pulses for obstacle detection and absence. Long vibration on-demand provides obstacle distance information.

Safety Function: Intense, continuous vibration alerts users to obstacles within 30 cm.

User Interaction: Mimics white cane exploration by pointing the device. Non-intensive feedback allows users to gather more information on-demand.

Prototype Hardware: Includes microcontroller, laser sensor, electromagnetic coil, vibration motor, and push button. Designed for portability and ease of use, resembling a traditional white cane.
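The distance thresholds in these notes (a 1-meter detection range and a 30 cm safety zone) can be sketched as a small decision function. This is a hypothetical Python illustration of the behavior described above, not the cane prototype's firmware; the `Haptic` names and the choice to treat both thresholds as inclusive are assumptions.

```python
from enum import Enum
from typing import Optional

DETECTION_RANGE_M = 1.0  # laser sensor range from the notes above
SAFETY_RANGE_M = 0.30    # distance that triggers the safety alert


class Haptic(Enum):
    """Feedback patterns named after the behaviors in the notes (names are assumed)."""
    NO_OBSTACLE = "no obstacle pulse"
    SHORT_PULSE = "short pulse"
    SAFETY_CONTINUOUS = "intense continuous vibration"


def choose_feedback(distance_m: Optional[float]) -> Haptic:
    """Pick a haptic pattern for a sensor reading.

    distance_m is None when the sensor sees nothing at all.
    Threshold values are treated as inclusive (an assumption).
    """
    if distance_m is None or distance_m > DETECTION_RANGE_M:
        return Haptic.NO_OBSTACLE
    if distance_m <= SAFETY_RANGE_M:
        return Haptic.SAFETY_CONTINUOUS
    return Haptic.SHORT_PULSE


if __name__ == "__main__":
    for reading in (None, 1.5, 0.8, 0.2):
        print(reading, "->", choose_feedback(reading).value)
```

The on-demand long vibration that reports distance is left out here, since the notes do not say how distance maps to vibration length.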

Pros

Tactile Feedback: The haptic feedback provided by the walking cane system offers immediate tactile information about obstacles, allowing users to navigate safely and independently.

Obstacle Detection: The laser sensor detects obstacles within a 1-meter range, providing real-time feedback to the user, which is crucial for avoiding collisions.

Safety Function: The intense, continuous vibration alerts users to obstacles within a close range (within 30 cm), providing an additional layer of safety.

User Interaction: Mimicking the traditional white cane exploration, the device allows users to interact with their surroundings in a familiar way, promoting ease of use and adoption.

Portability: The prototype hardware is designed to be portable and resembles a traditional white cane, ensuring that users can carry and use it comfortably.

Cons

Limited Range: The system's obstacle detection range is limited to 1 meter, potentially missing objects or obstacles beyond this distance.

Single Sensory Modality: The system relies solely on haptic feedback, which may not be sufficient for providing comprehensive environmental information to users, especially in complex or dynamic environments.

Dependency on Hardware: Users must carry and use the physical device to benefit from its functionality, which may be cumbersome or inconvenient in certain situations.

Learning Curve: Users may require training to effectively interpret the haptic feedback provided by the system, especially if they are unfamiliar with similar assistive technologies.

Cost: Developing and maintaining the hardware components of the walking cane system may incur significant costs, potentially limiting its accessibility to some users.

Brainstorming Solutions

IDEATION

After considering the user interviews and desk research, we developed our initial solution using the Microsoft HoloLens 2 with the Mixed Reality Toolkit in Unity. With the toolkit, our solution is to create realistic 3D models and a C# script that allow users to “touch” the object with their hands. As they touch it, our description of the object is played. With this approach, we have to keep in mind the size of the model and its placement relative to the user.